TFNN

The TFNN project has turned into SynthNet – you can read more about it here.

TFNN, short for “Temporal Frame Neural Network”, is an ongoing artificial intelligence research project I started a number of years ago. The ultimate goal is a true, functional software model of a biological neural network. While this is an incredibly lofty goal, the project serves more as a learning opportunity for me (and anyone else interested).

Currently, the Temporal Frame Neural Network demonstrates the following abilities:

- Associative learning (via Hebbian plasticity)

- Non-associative learning (habituation and sensitization)

- Increased or decreased transmitter effectiveness via virtual neuromodulators

- Connectivity via axodendritic, axosomatic, and axoaxonic synaptic connections

- Cell growth and death due to virtual neurotrophins

- Geographic representation of neural network, allowing for spatial dependent connections

- Parallel functionality, allowing for a more accurate simulation

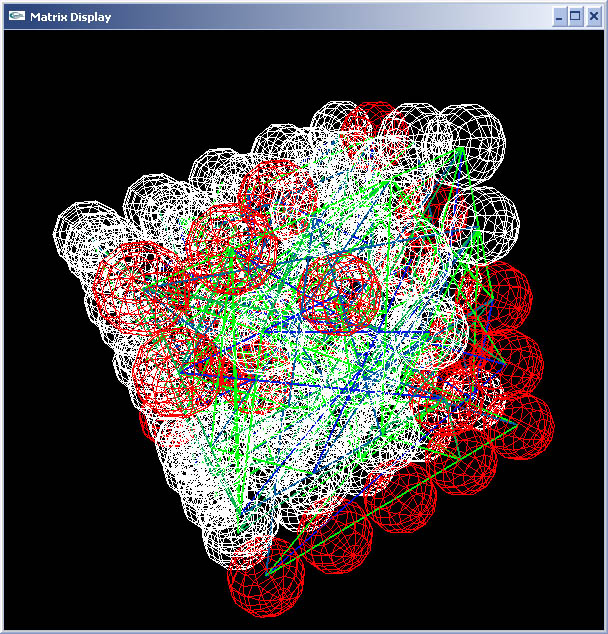

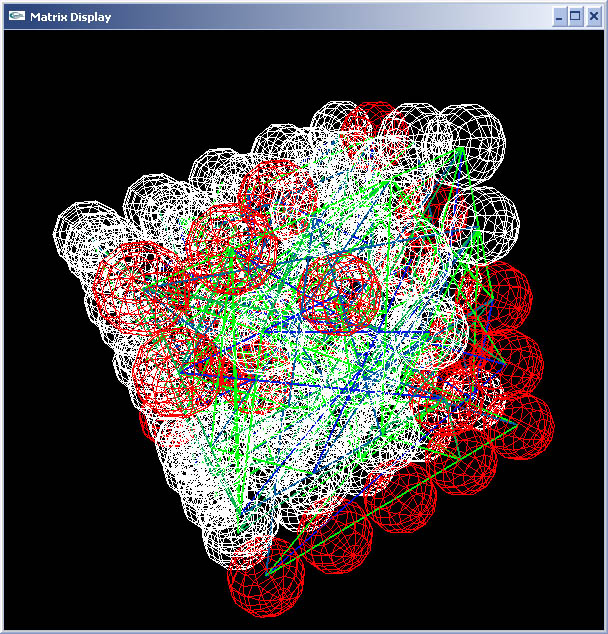

- A “visual fMRI” engine to display activity within a specific matrix
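The list above names several plasticity mechanisms without showing how they behave. The Python below is a minimal, hypothetical sketch of three of them (Hebbian strengthening, habituation, and sensitization), plus a neuromodulator gain, acting on a single synapse over discrete frames. It is not TFNN's code: the `Synapse` class, the assumed [0, 1] weight range, and every rate constant are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Synapse:
    """Hypothetical single synapse; an illustration, not TFNN's data structure."""
    weight: float = 0.5  # assumed transmitter effectiveness, kept in [0, 1]

    def _clamp(self) -> None:
        # Keep the weight inside the assumed [0, 1] range.
        self.weight = min(1.0, max(0.0, self.weight))

    def hebbian(self, pre: bool, post: bool, rate: float = 0.1) -> None:
        """Associative: strengthen only when both cells fire in the same frame."""
        if pre and post:
            # Soft-bounded update: gains shrink as the weight nears 1.0.
            self.weight += rate * (1.0 - self.weight)
            self._clamp()

    def habituate(self, factor: float = 0.2) -> None:
        """Non-associative: repeated, inconsequential stimulation weakens the synapse."""
        self.weight *= 1.0 - factor
        self._clamp()

    def sensitize(self, modulator: float, gain: float = 0.3) -> None:
        """Non-associative: a modulatory signal (e.g. a noxious stimulus) strengthens it."""
        self.weight += gain * modulator * (1.0 - self.weight)
        self._clamp()

    def transmit(self, pre: bool, modulator_gain: float = 1.0) -> float:
        """Output for one frame; the modulator scales effectiveness without changing the weight."""
        return self.weight * modulator_gain if pre else 0.0


if __name__ == "__main__":
    s = Synapse()
    for _ in range(3):
        s.hebbian(pre=True, post=True)  # paired firing in each of three frames
    print(f"after pairing:       {s.weight:.3f}")
    for _ in range(3):
        s.habituate()  # stimulus repeated with no consequence
    print(f"after habituation:   {s.weight:.3f}")
    s.sensitize(modulator=1.0)
    print(f"after sensitization: {s.weight:.3f}")
    print(f"modulated output:    {s.transmit(True, modulator_gain=1.5):.3f}")
```

The soft-bounded updates (scaling by `1 - weight`) are one common way to stop repeated Hebbian pairing from growing a weight without limit; a full model like TFNN may handle bounds, timing, and modulator chemistry quite differently.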

Some (very lofty) things left to do:

- Include more accurate support for neurotransmitters and neuromodulators, with behaviors specific to each

- Functionality to grow neural pathways as dictated by virtual DNA

- Engine to take real DNA data (sea slug, etc.) and convert it to virtual DNA

- Include the ability to take advantage of multi-core processors

Again, this is a learning adventure for me, so if you have any knowledge or ideas to contribute, please don’t hesitate to get in touch.

Hello - and thanks for visiting my site! I maintain ToniWestbrook.com to share information and projects with others with a passion for applying computer science in creative ways. Let's make the world a better and more beautiful place through computing! | More about Toni »