Though I have fun working on SynthNet and other projects at night, during the day I fill the role of mild-mannered network administrator at the Manchester-Boston Regional Airport (actually, the day job is quite a bit of fun as well). One of the ongoing projects I’ve taken on is adding all of our various Intranet-oriented services into a single platform for central management, easier use, and cost effectiveness. As mentioned in a previous article (linked to below, see NMS Integration), I knew Drupal was the right candidate for the job, simply due to the sheer number of modules available for a wide array of functionality, paired with constant patching and updates from the open source community. We needed a versatile, sustainable solution that was completely customizable but wasn’t going to break the bank.

The Mission

The goal of our Drupal Intranet site was to provide the following functionality:

- PDF Document Management System

  - Categorization, customized security, OCR

  - Desktop-integrated uploads

  - Integration with the asset management system

- Asset Management System

  - Inventory database

  - Barcode tracking

  - Integration with our NMS (Zenoss)

  - Integration with the Document Management System (linking each item to procurement documents such as invoices and purchase orders)

  - Automated scanning/entry of values for computer-type assets (CPU/Memory/HD Size/MAC Address/etc.)

  - Physical network information (for network devices, the switch and port each device is connected to)

  - For network switches, automated configuration backups

- Article Knowledge Base (categorization, customized security)

- Help Desk (ticketing, email integration, due dates, ownership, etc.)

- Public Address System integration (allow listening to the PA system)

- Active Directory Integration (users, groups, and security controlled from Windows AD)

- Other non-exciting generic databases (phone directories, etc.)

Implementation

Amazingly enough, the core abilities of Drupal covered the vast majority of the required functionality out of the box. Using custom content types with CCK fields, Taxonomy, Views, and Panels, we easily reproduced the typical database functionality (entry, summary table listings, sorting, searching, filtering, etc.) of the items above. However, specialized modules and custom coding were necessary for the following parts:

- Customized Security – Security was achieved for the most part via Taxonomy Access Control and Content Access. TAC allowed us to control access to content based on user roles and categorization of said content (e.g. a user who was a member of the “executive staff” role would have access to documents with a specific taxonomy field set to “sensitive information”, whereas other users would not). Additionally, Content Access allows you to further refine access down to the specific node level, so each document can have individual security assigned to it.

- OCR – This was one of the few areas where we chose to use a commercial product. While there are some open source solutions out there, some of the commercial engines are still considerably more accurate, including the one we chose, ABBYY. ABBYY makes a Linux version of its software that can be driven from the shell. With a little custom coding, we have the ABBYY software running on each PDF upload, turning it into an indexed PDF. A preview of the document is shown in Flash by first creating an SWF version with pdf2swf (part of SWFTools), then displaying it with FlexPaper.

- Linking Documents – This was handled with node references and the Node Reference Explorer module, which provides user-friendly popup dialogs for choosing the content to link to.

- Desktop Integration – Instead of going through the full steps of creating a new node each time (choosing a file to upload, filling in fields, etc.), we wanted users to be able to right-click a PDF file on their desktop and select “Send To -> Document Archive” in Windows. For this, we ended up writing a custom .NET application that opens an HTTP connection to the Drupal site and POSTs the files to it. Design of this application is an article in itself (maybe soon!).

- Barcoding – This was the other place we used a commercial product, simply because of its close integration with our Zebra barcode printers; we wanted to stick with ZebraDesigner. However, ZebraDesigner can accept the barcode's ID from an outside source (text, XML, etc.), so this was simply a matter of having Drupal write the ID of the current hardware item to a file and automating ZebraDesigner to open and print it.

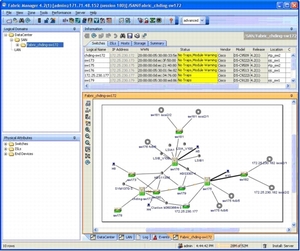

- NMS (Zenoss) Integration – How we accomplished this is described in a separate article.

- Automated Switch Configuration Backups and Network Tracking – This took only a little custom coding and was not as difficult as it might seem. Once all our network switches were entered into the asset management system with their IP addresses, our module used cURL during Drupal's cron hook to pull each switch's configuration through its web interface by issuing a SHOW STARTUP-CONFIG command (e.g. http://IP/level/15/exec/-/show/startup-config/CR); the result was saved and attached to the switch's node. We also pulled the MAC address table from each switch (SHOW MAC-ADDRESS-TABLE), parsed it, matched the MAC addresses recorded on each asset against each switch port, and stored the resulting switch/port location on each asset. As a result, we could see where every device on the network was connected. A more detailed description of the exact process may also become a future article.

- Help Desk – While this could have been accomplished with a custom content type and Views, we chose to use the Support Ticketing Module, as it had some added benefits (graphs, email integration, etc.).

- Public Address System – Our PA system can generate Icecast streams of its audio. We play these in the browser using the FFMp3 Flash MP3 Live Stream Player.

- Automated Gathering of Hardware Info – For this, we used a free product called WinAudit, launched from the AD login scripts. WinAudit takes a full inventory of pretty much everything on a computer (hardware, software, licenses, etc.) and dumps it to a CSV or XML file. All our AD machines run an audit at login and drop these files in a central location, where Drupal picks them up during cron runs to update the asset database.

- Active Directory Integration – The first step was to make the Apache server itself a domain member, which we did through the standard Samba/winbind configuration. We then set up the PAM Authentication module, which lets the Drupal login use the PHP PAM package and, through it, standard Linux PAM authentication; once the server is joined to AD, that includes all AD accounts and groups. A little custom coding also ensured that a matching Drupal role was created for each AD group a user belongs to, allowing us to control access within Drupal (see Customized Security above) via AD groups.
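To make the layering in the Customized Security item concrete, here is a simplified Python model of the access decision: role/term grants in the style of Taxonomy Access Control, with optional per-node settings in the style of Content Access taking precedence. This is not how Drupal's node-access grant system is actually implemented; the grant table, role names, and the `Node`/`can_view` names are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical TAC-style grant table: (role, taxonomy term) pairs allowed to view.
TERM_GRANTS: set[tuple[str, str]] = {
    ("executive staff", "sensitive information"),
    ("executive staff", "general"),
    ("authenticated user", "general"),
}


@dataclass
class Node:
    title: str
    terms: set[str]  # taxonomy terms attached to the document
    # Optional per-node settings (Content Access style): role -> may view.
    node_grants: dict[str, bool] = field(default_factory=dict)


def can_view(user_roles: set[str], node: Node) -> bool:
    """Simplified check: per-node settings win; otherwise any role/term grant allows."""
    if node.node_grants:
        return any(node.node_grants.get(role, False) for role in user_roles)
    return any((role, term) in TERM_GRANTS
               for role in user_roles for term in node.terms)
```

In this model a document tagged "sensitive information" is visible to the executive staff role only, unless that particular node carries its own settings.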
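The OCR and preview step in the list above is essentially a two-stage shell pipeline run on each upload. The sketch below assumes Python on the Linux server and shows only the orchestration. `OCR_COMMAND` is a hypothetical placeholder, because the ABBYY command-line syntax depends on the product version; `pdf2swf input.pdf -o output.swf` is the usual SWFTools invocation. The `-ocr.pdf` naming is also an assumption.

```python
import subprocess
from pathlib import Path

# Hypothetical placeholder for the ABBYY CLI; {src} and {dst} are filled in per file.
# This is NOT the real tool's syntax; substitute the installed version's command line.
OCR_COMMAND = ["abbyy-ocr-cli", "{src}", "{dst}"]


def build_commands(pdf: Path) -> list[list[str]]:
    """Return the OCR command, then the SWF preview command, for one uploaded PDF."""
    searchable = pdf.with_name(pdf.stem + "-ocr.pdf")
    swf = pdf.with_suffix(".swf")
    ocr = [token.format(src=pdf, dst=searchable) for token in OCR_COMMAND]
    preview = ["pdf2swf", str(searchable), "-o", str(swf)]
    return [ocr, preview]


def process_upload(pdf: Path) -> None:
    """Run both stages in order; check=True stops on the first failing tool."""
    for command in build_commands(pdf):
        subprocess.run(command, check=True)
```

Keeping command construction separate from execution makes it easy to log or test the exact commands before anything touches the real tools.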
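The desktop upload tool was a .NET application. As an illustration only, the Python sketch below shows the core of what such a client does: build a multipart/form-data body containing the PDF and POST it to the site. The endpoint URL, the `title` and `files[upload]` field names, and the absence of authentication are all assumptions; a real Drupal endpoint would likely also require a login session or token.

```python
import sys
import urllib.request
import uuid
from pathlib import Path

# Hypothetical endpoint; replace with the site's real upload handler.
ARCHIVE_URL = "http://intranet.example/document-archive/upload"


def build_multipart(fields: dict[str, str], file_field: str,
                    filename: str, data: bytes) -> tuple[bytes, str]:
    """Return (body, content_type) for a multipart/form-data POST."""
    boundary = uuid.uuid4().hex
    parts: list[bytes] = []
    for name, value in fields.items():
        parts += [
            f"--{boundary}".encode(),
            f'Content-Disposition: form-data; name="{name}"'.encode(),
            b"",
            value.encode("utf-8"),
        ]
    parts += [
        f"--{boundary}".encode(),
        (f'Content-Disposition: form-data; name="{file_field}"; '
         f'filename="{filename}"').encode("utf-8"),
        b"Content-Type: application/pdf",
        b"",
        data,
        f"--{boundary}--".encode(),
        b"",  # trailing CRLF after the closing boundary
    ]
    return b"\r\n".join(parts), f"multipart/form-data; boundary={boundary}"


def send_to_archive(path: Path, url: str = ARCHIVE_URL) -> int:
    """POST one PDF and return the HTTP status code."""
    body, content_type = build_multipart(
        {"title": path.stem}, "files[upload]", path.name, path.read_bytes())
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": content_type}, method="POST")
    with urllib.request.urlopen(request, timeout=60) as response:
        return response.status


if __name__ == "__main__":
    # A "Send To" shortcut passes each selected file as a command-line argument.
    for arg in sys.argv[1:]:
        print(arg, send_to_archive(Path(arg)))
```

Placing a shortcut to such a program in the user's Send To folder is what makes it appear in the right-click menu.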
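For the Barcoding item, the Drupal side only has to write the current item's ID where ZebraDesigner expects to find it. A minimal Python sketch, assuming a plain one-line text file (the article only says text/xml/etc.) at a hypothetical path; how ZebraDesigner is then triggered to open and print the label is product-specific and not shown.

```python
import os
import tempfile
from pathlib import Path


def write_label_source(asset_id: str, target: Path) -> Path:
    """Write the current asset ID to the file the label template reads.

    The file is written under a temporary name and then swapped into place,
    so a label job reading it never sees a half-written ID.
    """
    target.parent.mkdir(parents=True, exist_ok=True)
    fd, tmp_name = tempfile.mkstemp(dir=target.parent, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as handle:
            handle.write(asset_id + "\n")
        os.replace(tmp_name, target)
    except BaseException:
        # Clean up the temporary file if anything failed before the swap.
        if os.path.exists(tmp_name):
            os.remove(tmp_name)
        raise
    return target
```

The temporary file is created in the same directory as the target so that `os.replace` is a simple rename rather than a cross-filesystem copy.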
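The switch work in the Automated Switch Configuration Backups item combines three small pieces: fetching command output over the switch's HTTP exec interface, parsing the MAC address table, and matching those MACs against asset records. The Python sketch below uses `urllib` in place of cURL. Only the `show/startup-config` URL shape comes from the article; generalizing it to other commands, the uplink-exclusion rule, and all function names are assumptions. The parser targets the common Cisco IOS table layout (VLAN, MAC, type, port), which can vary between models and software versions.

```python
import re
import urllib.request


def exec_url(ip: str, command: str) -> str:
    """Build an HTTP exec URL, e.g. 'show startup-config' -> .../show/startup-config/CR.

    Assumption: other commands follow the same pattern as the article's example.
    """
    return f"http://{ip}/level/15/exec/-/" + "/".join(command.split()) + "/CR"


def run_command(ip: str, command: str, user: str, password: str) -> str:
    """Fetch a command's output using HTTP basic authentication."""
    url = exec_url(ip, command)
    passwords = urllib.request.HTTPPasswordMgrWithDefaultRealm()
    passwords.add_password(None, url, user, password)
    opener = urllib.request.build_opener(urllib.request.HTTPBasicAuthHandler(passwords))
    with opener.open(url, timeout=30) as response:
        return response.read().decode("utf-8", errors="replace")


# One table row: VLAN, dotted MAC, entry type, port ("  1  0011.2233.4455  DYNAMIC  Gi0/1").
MAC_ROW = re.compile(
    r"^[ \t]*(\d+)[ \t]+([0-9a-f]{4}\.[0-9a-f]{4}\.[0-9a-f]{4})[ \t]+\S+[ \t]+(\S+)[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def normalize_mac(mac: str) -> str:
    """Reduce any MAC notation (dotted, colon, dash) to 12 lowercase hex digits."""
    return re.sub(r"[^0-9a-f]", "", mac.lower())


def parse_mac_table(text: str) -> dict[str, str]:
    """Map normalized MAC -> port for every data row of the table."""
    return {normalize_mac(m.group(2)): m.group(3) for m in MAC_ROW.finditer(text)}


def locate_assets(asset_macs: dict[str, str],
                  switch_tables: dict[str, dict[str, str]],
                  uplinks: dict[str, set[str]]) -> dict[str, tuple[str, str]]:
    """Return asset id -> (switch, port), ignoring ports that lead to other switches."""
    by_mac = {normalize_mac(mac): asset for asset, mac in asset_macs.items()}
    locations: dict[str, tuple[str, str]] = {}
    for switch, table in switch_tables.items():
        skip = uplinks.get(switch, set())
        for mac, port in table.items():
            if port not in skip and mac in by_mac:
                locations[by_mac[mac]] = (switch, port)
    return locations
```

Uplink ports see the MAC addresses of everything behind the next switch, so excluding them keeps each device pinned to the access port it is actually plugged into.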
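For the WinAudit item, the cron side comes down to reading each dropped report and pulling out the fields the asset records care about. WinAudit's real export layout is richer than this, so the sketch below assumes a simplified two-column CSV (`Item,Value`) per machine, named after the computer. `FIELD_MAP`, the item names, and the file-naming convention are hypothetical and would need to be mapped to the actual report.

```python
import csv
from pathlib import Path
from typing import Iterable

# Hypothetical mapping from report item names to asset fields.
FIELD_MAP = {
    "Processor": "cpu",
    "Total Memory": "memory",
    "Disk Size": "hd_size",
    "MAC Address": "mac",
}


def parse_audit(lines: Iterable[str]) -> dict[str, str]:
    """Extract mapped fields; the first value wins (e.g. the first NIC's MAC)."""
    fields: dict[str, str] = {}
    for row in csv.DictReader(lines):
        key = FIELD_MAP.get(row.get("Item") or "")
        if key and key not in fields:
            fields[key] = (row.get("Value") or "").strip()
    return fields


def read_audit(path: Path) -> dict[str, str]:
    with path.open(newline="", encoding="utf-8") as handle:
        return parse_audit(handle)


def collect_audits(share: Path) -> dict[str, dict[str, str]]:
    """Map computer name (taken from the file name) -> extracted fields."""
    return {p.stem.upper(): read_audit(p) for p in sorted(share.glob("*.csv"))}
```

The resulting dictionary keyed by computer name is what a cron job would then compare against, and write into, the matching asset records.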
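The role-sync glue in the Active Directory item can be reduced to a set comparison. The sketch below assumes Python on the domain-member Linux server, where winbind makes AD users and groups visible through the standard `pwd`/`grp` lookups. The `ad:` role prefix (used to tell synced roles apart from hand-made ones) and all function names are hypothetical, and the actual Drupal calls that create and assign roles are left out. Depending on the winbind configuration, group names may come back qualified as `DOMAIN\group`.

```python
import grp
import os
import pwd


def ad_groups_for(username: str) -> set[str]:
    """Look up a user's group names through NSS (winbind supplies AD groups)."""
    primary_gid = pwd.getpwnam(username).pw_gid
    names: set[str] = set()
    for gid in os.getgrouplist(username, primary_gid):
        try:
            names.add(grp.getgrgid(gid).gr_name)
        except KeyError:
            continue  # gid with no resolvable name
    return names


def plan_role_sync(ad_groups: set[str], existing_roles: set[str],
                   user_roles: set[str], prefix: str = "ad:"):
    """Return (roles to create, roles to grant, synced roles to revoke)."""
    desired = {prefix + group for group in ad_groups}
    to_create = desired - existing_roles
    to_grant = desired - user_roles
    to_revoke = {role for role in user_roles if role.startswith(prefix)} - desired
    return to_create, to_grant, to_revoke
```

Revoking synced roles that no longer match keeps Drupal access in step when someone leaves an AD group; that step goes beyond what the article describes.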

There was a liberal dose of code within a custom module to glue some of the pieces together cleanly, but overall the system works smoothly, even under heavy use. And the best part is that it consists mainly of free software, which is awesome considering how much we would have paid had we gone completely commercial for everything.

Please feel free to shoot me any specific questions about functionality if you have them – there were a number of details I didn’t want to bog the article down with, but I’d be happy to share my experiences.